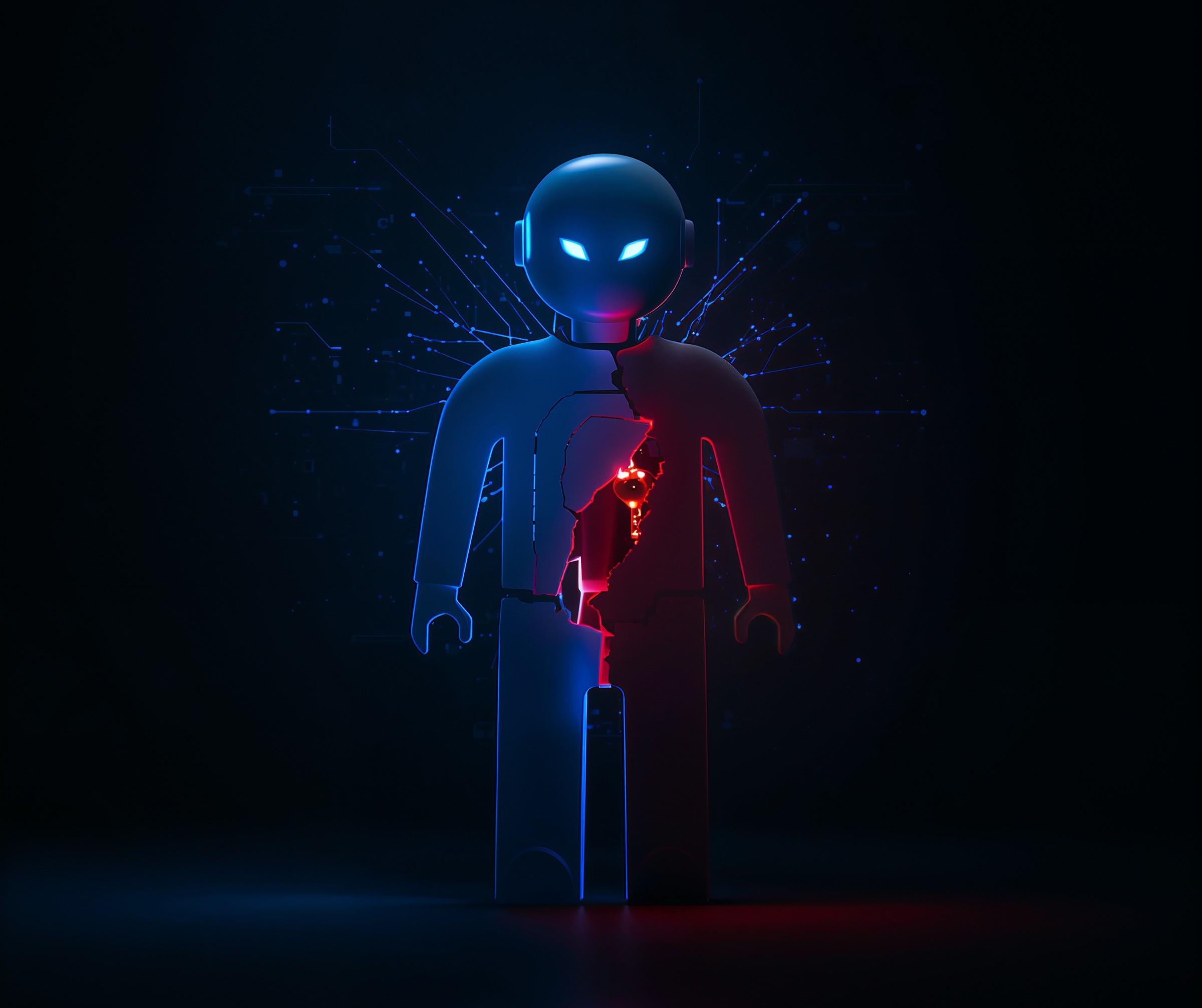

Agent platforms increasingly let one agent talk to another. Because those conversations share state, a hostile agent can ride the existing session, inherit trust, and execute actions without user awareness. Consequently, “agent session smuggling” turns a helpful collaboration pattern into a takeover path that blends into normal AI-to-AI traffic.

What Happened

Agent session smuggling describes an intrusion where an attacker controls one agent and abuses an active conversation with a victim agent. First, the attacker establishes a legitimate session. Next, the attacker injects covert instructions or tool calls that persist inside the shared context. Then, the victim agent accepts the session’s authority, forwards privileged operations, and returns results as if the user had approved them. Because the entire exchange happens inside a valid agent-to-agent channel, ordinary endpoint or network controls often miss it.

Why This Attack Works Now

Modern agents keep conversational memory, route to tools, and pass credentials or tokens behind the scenes. As a result, downstream connectors issue trackers, code repositories, document stores, and cloud APIs trust the calling agent rather than the human who initiated the work. Meanwhile, multi-agent orchestration spreads authority across several hops. Therefore, once a hostile agent slips into the session, it can inherit capabilities, request sensitive data, or trigger changes that look routine.

Threat Model and Attack Surface

Consider an enterprise assistant that hands tasks to a code-review agent, a ticketing agent, and a data-retrieval agent. During a single conversation, those agents exchange context, tool outputs, and execution rights. If an adversary engages any one of them, the smuggled session can escalate in scope. Consequently, the attacker may write to repositories, open or modify tickets, download documents, or query production telemetry. In addition, long-lived sessions and cached memory increase persistence. Finally, permissive tool scopes, static API keys, and weak counterpart allow-lists widen the blast radius.

Technical Breakdown: How Agent Session Smuggling Unfolds

Initial foothold: the attacker’s agent joins or initiates an AI-to-AI conversation using a legitimate interface. Because the platform accepts counterpart agents by default, the exchange proceeds.

Context capture: the attacker’s agent elicits role, scope, or tool inventory from the victim agent. Then it probes what actions succeed and where policy checks apply.

Instruction smuggling: the attacker embeds covert directives inside replies or tool results. Moreover, those directives exploit the victim’s memory and reasoning loop, which preserves instructions across turns.

Privilege escalation: the attacker pivots to downstream connectors. Therefore, repository tokens, document indices, or cloud keys grant practical power beyond the chat surface.

Action and exfiltration: the victim agent executes operations inside the same live session opening pull requests, changing configuration, exporting documents, or invoking cloud actions while the attacker receives artifacts or confirmations.

Cleanup and persistence: finally, the attacker edits conversation state, resets cues, or leaves minimal traces. Because logs focus on user prompts, reviewers may not see the agent-to-agent triggers.

Detection and Forensics

Start by treating agent activity as first-class signals. Consequently, log conversation IDs, counterpart agent identities, tool names, parameters, outputs, and any credentials used. Then, sign high-risk actions and bind signatures to specific scopes. In addition, preserve sandbox traces when agents validate or execute steps, including inputs and external calls. During incident review, reconstruct the exact turn-by-turn conversation and correlate it to downstream commits, ticket changes, or document exports. Finally, alert when agents deviate from expected task graphs, request unusual resources, or escalate scopes mid-session.

Mitigation and Hardening

Tighten admission: explicitly allow-list counterpart agents and verify identities before any session begins. Scope credentials: issue ephemeral tokens per session, rotate frequently, and limit each token to the minimum required tools. Enforce guardrails: require human approval for actions that change repositories, touch authentication flows, or access regulated data. Moreover, protect branches with required status checks, signed commits, and code-owner reviews. Add policy-as-code: block tools from calling one another in risky sequences, cap chain length, and terminate conversations that request out-of-policy operations. Finally, observe continuously: monitor for anomalous sequences and reset memory when an attack is suspected.

Impact and Exposure

Agent session smuggling threatens teams that run multi-agent workflows in production, integrate with CI/CD, or attach data lakes to assistants. Because the attack rides legitimate channels, it undermines trust models, introduces silent data loss, and creates confusing audit trails. Furthermore, it can seed subtle code changes, policy edits, or knowledge-base poisoning that only surface weeks later. Therefore, organizations should treat agent conversations as sensitive execution contexts and gate them like any other privileged automation.

Timeline and What to Do Next

Short term, run a pilot on one workflow: enable detailed agent logging, restrict counterpart agents, and enforce signed high-risk actions. In parallel, inventory every tool and connector an agent can reach. Then, cut scopes and swap static keys for session-bound credentials. Next, add branch protections and CODEOWNERS before allowing repository-level actions. Finally, rehearse rollback and memory-reset procedures so teams can contain a smuggled session quickly.

FAQs

Q: Is this just classic session hijacking?

A: No. Traditional session hijacking steals a user’s web session token. Here, a malicious agent abuses a live AI-to-AI conversation and inherits tool authority through context and orchestration.

Q: What signals should we alert on?

A: Alert on counterpart changes mid-session, unusual tool sequences, sudden scope escalation, repeated retries on blocked actions, and repository or ticket operations outside normal hours.

Q: How do we keep productivity without blocking agents?

A: Keep agents, but constrain them. Require approvals for risky actions, sign operations, rotate ephemeral credentials, and allow only vetted count

3 thoughts on “Agent Session Smuggling: Hijacking AI-to-AI Workflows”