Google has taken another major step in AI security transparency, launching its first dedicated AI Vulnerability Reward Program (AI VRP). Through this initiative, the company is inviting researchers and ethical hackers to probe its AI systems from Gemini to Search for vulnerabilities that could expose user data, cause unintended AI behavior, or enable abuse.

The program offers a maximum payout of $30,000, rewarding critical, high-impact discoveries that demonstrate clear exploitation potential or systemic risk.

By expanding its traditional bug bounty framework into the AI domain, Google is recognizing what many in the cybersecurity community already know: AI systems introduce new classes of vulnerabilities that require a new generation of ethical testing and red teaming.

What the AI VRP Covers

The AI VRP extends across Google Search, Gemini Apps, and Workspace AI integrations, covering both backend model behavior and front-end integrations that rely on AI-generated responses.

Eligible reports may include:

-

Prompt injection and model hijacking

-

Data leakage or model output manipulation

-

Jailbreaks that bypass safety controls

-

AI misuse leading to phishing or abuse automation

-

Unauthorized access or data exfiltration routes

Google’s Bug Hunters Blog confirms that AI vulnerabilities are now evaluated on impact, reproducibility, and novelty ensuring unique discoveries are properly recognized.

Unlike traditional software exploits, AI weaknesses often emerge from model logic, data pathways, and adversarial prompt chains. Consequently, Google’s AI VRP prioritizes reports that demonstrate realistic harm or policy bypass.

For example, a report may show a model inversion attack that reconstructs sensitive training data. Similarly, a researcher might trigger prompt injection that coerces the model into off-policy or unsafe outputs. In addition, cross-domain leakage from Gemini or Workspace integrations can expose information that should remain isolated. Moreover, an AI-driven phishing workflow that uses model outputs to automate convincing lures can enable scaled abuse across platforms.

Finally, the program also recognizes responsible-misuse scenarios. In other words, the model behaves as designed, yet the combined workflow still creates risk when paired with automation, scraping, or chaining across services. Therefore, submissions should describe the end-to-end impact, not just a single prompt or screen.

Up to $30,000 for Critical Finds

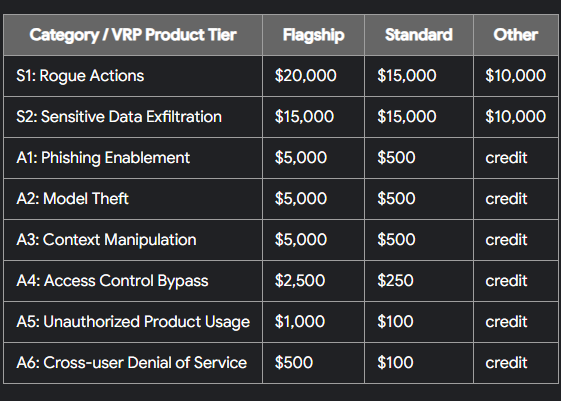

The payout system follows tiered severity levels:

-

$30,000 Critical flaws (e.g., unauthorized data exfiltration or control over model output pipelines)

-

$15,000–$20,000 High-impact vulnerabilities with reproducible exploits

-

$5,000–$10,000 Moderate model bypasses or behavioral issues

-

$1,000–$5,000 Low-severity or informational findings

Google also introduced novelty bonuses, which reward researchers who identify previously unknown classes of AI-specific weaknesses.

Each report must include proof of concept, detailed reproduction steps, and ethical disclosure in line with the AI VRP Rules.

AI Safety Through Collaboration

AI systems, unlike traditional software, are dynamic. Their vulnerabilities aren’t confined to code; they emerge from data, model training, and prompt behavior.

By launching the AI VRP, Google is effectively crowd-sourcing red teaming for its AI models, leveraging the expertise of the global security community.

This move comes at a crucial moment as AI is increasingly integrated into Search results, content generation, and enterprise tools. Without structured feedback from researchers, these AI features could unintentionally expose data or reinforce bias.

AI Vulnerabilities Under Scrutiny

Google’s initiative mirrors similar efforts by OpenAI and Anthropic, who also offer limited rewards for prompt or model vulnerabilities. However, Google’s scale spanning billions of AI queries daily positions this as the largest commercial AI bounty ecosystem yet.

Moreover, it signals an evolving consensus: AI red teaming must be incentivized, standardized, and transparent.

Responsible Disclosure in AI

Start on the Google Bug Hunters portal and submit to the AI VRP queue with clear steps to reproduce. Moreover, include the exact prompts, parameters, and run IDs that produced the behavior. Therefore, reviewers can validate your result quickly and assign an appropriate severity.

In addition, follow disciplined reporting hygiene. For example, avoid extracting or storing real user data; use redacted or synthetic artifacts instead. Likewise, run tests only on authorized domains, sandboxes, or demo environments that Google designates for research. Furthermore, document context, model versions, and any guardrails you disabled during testing so triage can replicate conditions.

Finally, practice ethical timing. As a result, disclose publicly only after Google confirms a fix or mitigation. Consequently, your research improves safety without enabling copycat abuse in the wild.

AI’s rapid evolution demands constant security oversight. By rewarding responsible disclosure, Google is not only protecting its users but also fostering a collaborative model of AI resilience.

As AI-driven systems shape industries from healthcare to finance, initiatives like the AI VRP set a precedent: security must evolve with intelligence.

FAQs

Q1. What is Google’s AI Vulnerability Reward Program?

It’s a new extension of Google’s bug bounty platform that rewards researchers for finding and responsibly disclosing AI-specific vulnerabilities.

Q2. How much can researchers earn?

Payouts range from $500 for minor findings to $30,000 for critical, verified vulnerabilities with significant impact potential.

Q3. Which AI systems are covered?

Gemini, Search AI, and Workspace AI integrations are within the initial scope, with future expansions expected.

Q4. What types of vulnerabilities qualify?

Prompt injections, data leaks, model exfiltration, and AI misuse scenarios with security implications.

Q5. How can I participate?

Join through Google’s Bug Hunters portal, submit detailed reports, and follow the AI VRP responsible testing rules.