Inside Switzerland’s Quantum-Ready Satellite Strategy for Defence

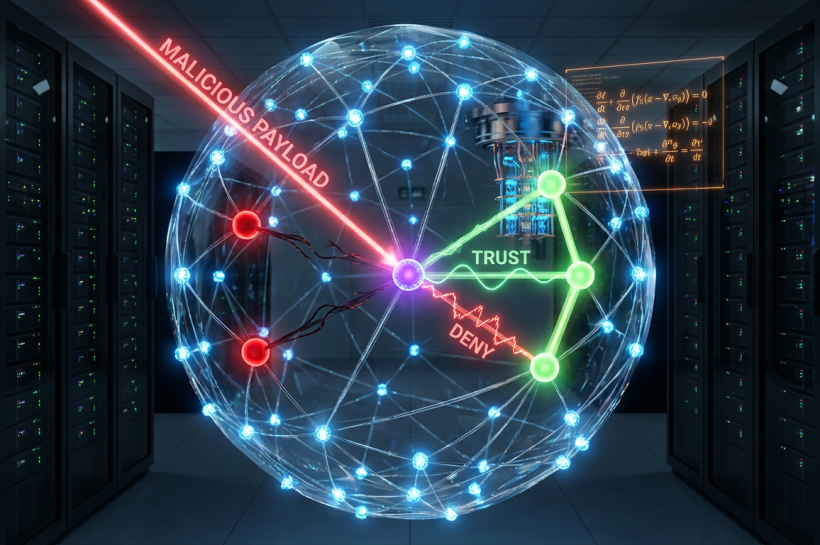

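Quantum encryption promises stronger security, yet it also strains satellite hardware, bandwidth and mission design. Switzerland’s Armed Forces are now redesigning their space architecture to cope with quantum-era threats and the limits of legacy spacecraft. Chief among those threats are “harvest-now, decrypt-later” campaigns, in which an adversary records encrypted traffic today and stores it until a sufficiently capable quantum computer can break the public-key cryptography that protected it.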